Google has reaffirmed that most websites need not worry about crawl budget constraints unless they surpass one million pages.

However, there’s an important detail that could change how larger sites approach their web performance.

One-Million-Page Threshold Remains Unchanged

During a recent episode of the Search Off the Record podcast, Gary Illyes from Google’s Search Relations team reiterated the company’s longstanding stance on crawl budgets when asked about page limits.

Understanding the Threshold

Illyes emphasized the significance of the one-million-page mark, suggesting that sites under this limit are largely unaffected by crawl budget concerns.

“I would say 1 million is okay probably.”

This indicates that websites with fewer than a million pages can largely disregard issues related to crawl budgeting.

What’s notable is that this benchmark hasn’t changed since 2020, despite the web’s exponential growth.

The increase in JavaScript usage, dynamic content, and complex site architectures hasn’t influenced Google’s set threshold.

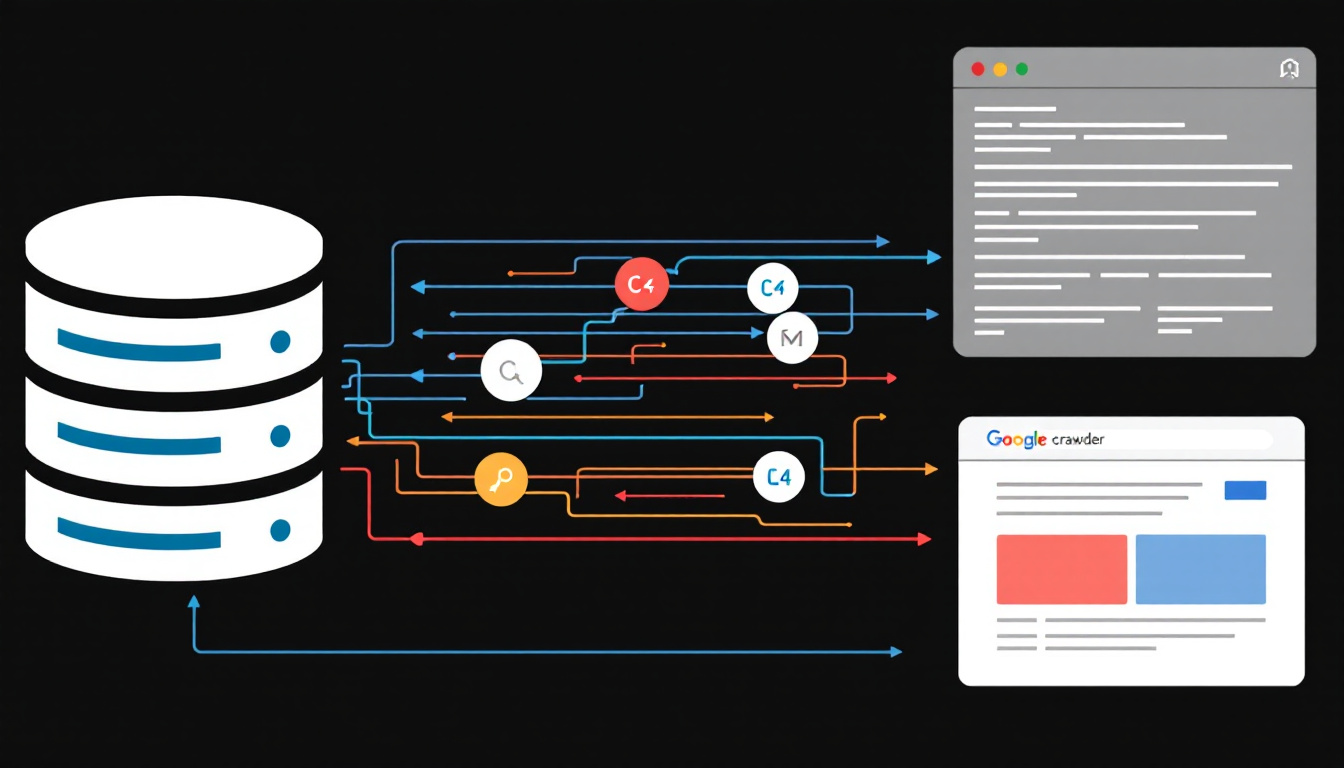

Database Speed Outweighs Page Quantity

Illyes pointed out that the performance of a website’s database has a more significant impact on crawling efficiency than the sheer number of pages.

Implications for Large Sites

This insight suggests that even sites with a substantial number of pages can maintain good crawl rates if their databases operate swiftly.

“If you are making expensive database calls, that’s going to cost the server a lot.”

For instance, a site hosting 500,000 pages with sluggish database queries may encounter more crawling challenges compared to a site with 2 million fast-loading static pages.

This shift in focus means that optimizing database performance is now essential, particularly for websites with dynamic content or intricate query requirements.
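To make the 500,000-page versus 2-million-page comparison concrete, here is a rough back-of-the-envelope sketch. The per-page timings (2.0 seconds for a slow dynamic page, 0.05 seconds for a static page) are assumptions chosen only for illustration and do not come from Google, and `crawl_server_hours` is a hypothetical helper name.

```python
def crawl_server_hours(pages: int, seconds_per_page: float) -> float:
    """Total server time, in hours, needed to serve every page once."""
    return pages * seconds_per_page / 3600


# Hypothetical timings, chosen only for illustration.
slow_dynamic_site = crawl_server_hours(500_000, 2.0)    # slow database queries
fast_static_site = crawl_server_hours(2_000_000, 0.05)  # fast static pages

print(f"500k dynamic pages: {slow_dynamic_site:.1f} server-hours")
print(f"2M static pages:    {fast_static_site:.1f} server-hours")
```

Under these assumed numbers, the smaller site needs roughly ten times more server time for one full crawl, even though it has a quarter as many pages.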

Indexing Takes the Spotlight over Crawling

Illyes shared perspectives that challenge common assumptions within the SEO community.

Resource Allocation

He clarified that the primary resource drain isn’t the crawling process but the indexing and data processing that follows.

“It’s not crawling that is eating up the resources, it’s indexing and potentially serving or what you are doing with the data when you are processing that data.”

This means that efforts should be directed towards making content easier for Google to handle post-crawl rather than merely preventing crawling.

Historical Context of Crawl Budget

The podcast also provided a retrospective look at how crawl budgets have evolved over time.

Early Web Crawling

Illyes compared early web indexing processes to today’s expansive web environment.

In 1994, the World Wide Web Worm indexed just 110,000 pages, and WebCrawler handled 2 million.

Illyes referred to these numbers as quaint when contrasted with today’s vast web, illustrating why the one-million-page threshold has held steady.

Stability of the Crawl Budget Threshold

Google’s continuous efforts to manage its crawling efficiency were also discussed.

Balancing Efficiency and Features

Illyes explained the complexities involved in maintaining the crawl budget threshold amidst evolving technologies.

“You saved seven bytes from each request that you make and then this new product will add back eight.”

This highlights the ongoing challenge of enhancing efficiency while integrating new features, resulting in a stable crawl budget threshold despite infrastructure advancements.

Strategic Recommendations for Website Owners

Considering these insights, Illyes provided guidance on what website owners should focus on moving forward.

For Sites Under One Million Pages

These sites can continue their current practices without significant concern for crawl budget limitations.

Sites Under 1 Million Pages:

– Maintain current strategies with an emphasis on quality content and user experience.

– Crawl budget is not a primary concern.

For Larger Websites

Websites exceeding the one-million-page mark should prioritize optimizing their database systems.

Larger Sites:

– Improve database efficiency by:

  – Reducing query execution times

  – Enhancing caching mechanisms

  – Speeding up dynamic content delivery
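As one way to picture the caching point above, the following minimal sketch keeps a rendered page in memory for a fixed time so that repeat requests, including repeat crawler visits, do not trigger the same database work. `TTLCache`, `load_product_page`, the URL, and the 300-second lifetime are all hypothetical names and values introduced for illustration; a production site would more likely rely on an established cache layer.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Keep computed values for ttl_seconds so repeat requests skip the database."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # fresh hit: no database call
        value = compute()    # miss or expired entry: run the expensive call
        self._store[key] = (now, value)
        return value


query_count = 0


def load_product_page(slug: str) -> str:
    """Hypothetical stand-in for an expensive database-backed page render."""
    global query_count
    query_count += 1
    return f"<h1>{slug}</h1>"


cache = TTLCache(ttl_seconds=300)
for _ in range(3):
    html = cache.get_or_compute(
        "/products/blue-widget", lambda: load_product_page("blue-widget")
    )

print(query_count)  # 1: only the first request reached the "database"
```

The trade-off in a design like this is freshness: a longer lifetime saves more database calls but serves stale content for longer after an update.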

Universal Best Practices

Regardless of size, all websites should shift their focus towards optimizing the indexing process.

All Sites:

– Focus on indexing optimization instead of crawl prevention.

– Assist Google in processing content more effectively by:

  – Enhancing database query performance

  – Reducing server response times

  – Optimizing content delivery and caching
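One way to check server response times as Googlebot experiences them is to read them from the server's access log. The sketch below assumes a simplified, hypothetical log format (`METHOD PATH STATUS SECONDS "USER-AGENT"`) and a 1-second "slow" cutoff; real log formats differ by server and configuration, so the pattern would need adapting. It also trusts the user-agent string, which can be spoofed.

```python
import re
from statistics import median

# Hypothetical log format: METHOD PATH STATUS SECONDS "USER-AGENT"
LINE = re.compile(
    r'^(?P<method>\S+) (?P<path>\S+) (?P<status>\d{3}) '
    r'(?P<seconds>\d+(?:\.\d+)?) "(?P<agent>[^"]*)"$'
)


def googlebot_timings(lines):
    """Return response times (seconds) for requests whose user agent mentions Googlebot."""
    timings = []
    for line in lines:
        match = LINE.match(line.strip())
        if match and "Googlebot" in match.group("agent"):
            timings.append(float(match.group("seconds")))
    return timings


# Made-up sample entries, for illustration only.
sample_log = [
    'GET /products/1 200 0.120 "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    'GET /products/2 200 1.900 "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    'GET /about 200 0.080 "Mozilla/5.0 (Windows NT 10.0)"',
    'GET /search?q=widgets 200 2.400 "Mozilla/5.0 (compatible; Googlebot/2.1)"',
]

timings = googlebot_timings(sample_log)
slow = [t for t in timings if t > 1.0]  # assumed cutoff for a "slow" response
print(f"Googlebot requests: {len(timings)}, median: {median(timings):.2f}s, slow: {len(slow)}")
```

Paths that repeatedly show up in the slow list are natural candidates for query tuning or caching.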

Future Outlook

Looking ahead, Google’s consistent guidance signals that foundational SEO principles still hold, while database performance becomes increasingly pivotal for larger websites.

Preparing for Tomorrow

Integrating database optimization into technical SEO is now more important than ever.

SEO professionals should incorporate database performance checks into their audits, and developers must focus on query optimization and caching strategies.
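A database performance check in an audit can be as simple as asking the database how it plans to run a page's main query. The sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` purely as a stand-in; the `pages` table, its columns, and the index name are hypothetical, and other database engines expose query plans through their own commands.

```python
import sqlite3

# In-memory database with a hypothetical "pages" table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pages (id INTEGER PRIMARY KEY, category TEXT, title TEXT)")
conn.executemany(
    "INSERT INTO pages (category, title) VALUES (?, ?)",
    [(f"cat-{i % 100}", f"Page {i}") for i in range(10_000)],
)


def query_plan(connection, sql, params=()):
    """Return the detail text of each EXPLAIN QUERY PLAN row, joined into one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


sql = "SELECT id, title FROM pages WHERE category = ?"

plan_before = query_plan(conn, sql, ("cat-7",))
conn.execute("CREATE INDEX idx_pages_category ON pages (category)")
plan_after = query_plan(conn, sql, ("cat-7",))

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first plan should describe a scan of the whole table and the second a search that uses `idx_pages_category`, which is the kind of change that reduces query execution time on large tables.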

While the one-million-page threshold may stay the same in the coming years, sites that enhance their database capabilities now will be better positioned for future challenges.

Google’s enduring crawl budget guidance underscores that fundamental SEO practices remain relevant, but adds a new layer of emphasis on database efficiency for larger sites.

The Bottom Line

Google has reaffirmed that most websites don’t need to fret over crawl budgets unless they exceed one million pages.

The critical takeaway is the importance of database speed over page quantity, especially for larger sites.

By focusing on optimizing database performance and enhancing indexing processes, website owners can ensure efficient crawling and indexing by Google, maintaining their site’s health and visibility in search results.